Why AI is a hot topic for software development

Lately, AI and Machine Learning have been the most significant breakthroughs in the software testing space, and they will remain a hot topic for the foreseeable future.

With the evolution of tools like ChatGPT, Bard, and CodeWhisperer, companies strive to make their teams faster and avoid manual errors as much as possible. The barriers to adoption continue to get lower thanks to platform engineering - cloud-based machine learning platforms that offer scalable infrastructure and pre-built tools and frameworks for model development. Amazon and Google have recently bolstered their offerings. With that, demand for AI skills is expected to grow exponentially and drive a need for workers to learn new technical skills across industries (as shown by this higher education 2021 survey).

Primary benefits of AI in software testing are improved speed and consistency, and freed up time for more value-add activities. Apart from the potential benefits, however, there are also potential risks associated with generative AI–based tools, such as intellectual property, bias, and privacy concerns, as well as new data and regulatory challenges.

For a more detailed overview, you can learn more about ChatGPT and LLMs for software testing in our blog.

AI-powered solutions are being used in finance, retail, healthcare, manufacturing, transportation, and numerous other fields, and AI applications are only expected to grow. For software development, interesting cases can be found at each stage of the lifecycle, for example:

- Accelerating requirements, designs/mock-ups;

- Code review, documentation, and static analysis;

- Test case creation, execution, and maintenance;

- Reporting and debugging;

- Monitoring and predictive analysis for quality assurance (see Atlassian and Amazon case study as an example).

However, across these use cases, especially when it comes to creativity and medium or high complexity, accuracy and validity of results are far from perfect, so human intervention is still a must, as underscored by McKinsey and BCG case studies.

In this article, we will take a deeper dive into the role of AI in automated testing. You'll learn about:

- How AI helps with Automated Testing

1. Design

2. Maintenance

3. Execution

- How AI elevates the efficacy of automated testing

How AI helps with Automated Testing

Using AI to automate testing processes can be important for organizations that want to achieve continuous delivery. By using AI to create and run tests on new code more easily, developers can quickly identify and fix issues that arise, ensuring that the code is ready for deployment as soon as possible.

We will look at two large categories of automated tests based on the creation method - whether a user records the scenario or writes it out step-by-step as a "traditional" script - and discuss the AI applications for the design and maintenance processes of both.

Design

For the first category, automated UI testing is enabled by an intelligent recorder with a machine learning engine that gets smarter with each execution based on your application data.

You may need to walk such an AI tool through your application once, or, for some of the newer products (like GPTBot, Scrapestorm, or Browse.ai), you can point them at your web app to automatically begin "spidering" it. During that process, the intelligent automation tools leverage improved OCR (Optical Character Recognition) and other image recognition techniques to map the application. That enables better identification of elements even when their locators have been changed - no need for hard coding with something like accessibility IDs. Such AI tools can also collect feature and page data to measure performance, like load times, etc.

Over time, the AI tool builds up a dataset and trains its ML models on the expected patterns of your specific applications. That eventually allows you to create "simple" tests that leverage machine learning and automatically validate the visual correctness of the app based on those known patterns (without you having to specify all the assertions explicitly).

If there is a functional deviation (for instance, a page usually does not throw JavaScript errors but now does), a visual difference, or a page running slower than average, such AI-empowered tests would be able to detect most of those issues in your software and mark them as potential bugs.
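To make the idea of "learned" expectations more concrete, here is a minimal sketch of how a tool might flag such deviations. It is not how any particular product works: the `PageRun` structure, the `find_deviations` function, and the threshold of three standard deviations are all assumptions chosen for illustration, and a simple statistical baseline stands in for a trained ML model.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class PageRun:
    """One recorded execution of a page (hypothetical structure)."""
    load_time_ms: float
    js_error_count: int


def find_deviations(history: list[PageRun], current: PageRun,
                    z_threshold: float = 3.0) -> list[str]:
    """Compare the current run with the recorded baseline and list potential bugs."""
    findings = []

    # Functional deviation: the page never threw JavaScript errors before, but does now.
    if current.js_error_count > 0 and all(run.js_error_count == 0 for run in history):
        findings.append("new JavaScript errors")

    # Performance deviation: load time is far above the historical average.
    times = [run.load_time_ms for run in history]
    if len(times) >= 2:
        baseline, spread = mean(times), stdev(times)
        if spread > 0 and (current.load_time_ms - baseline) / spread > z_threshold:
            findings.append("slower than usual")

    return findings
```

The point of the sketch is that no assertion is written by hand: the expected behavior comes from previous executions, and the test only reports what differs from it.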

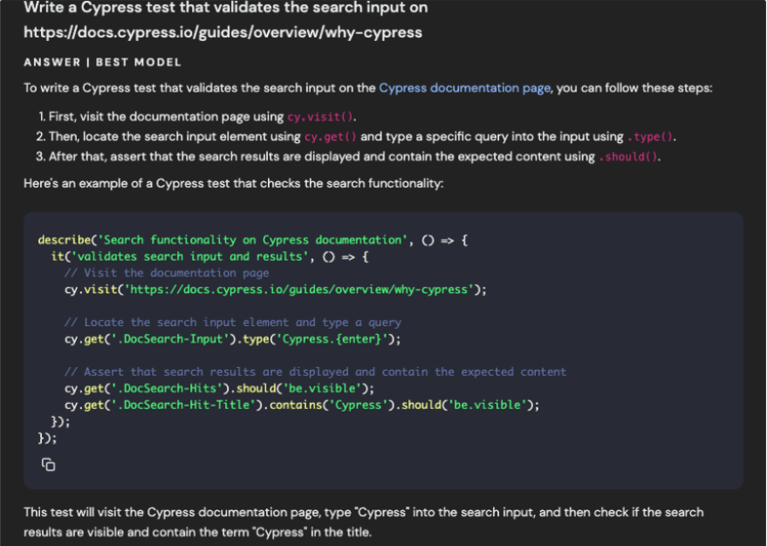

For scripted automated testing, in the "old world," an automation engineer would need to manually add code statements for each step in a test case. Now, AI can produce text in a variety of formats, including code snippets and annotations.

Additionally, AI platforms offer no-code/low-code options, which are especially useful to those who want to focus on higher-level tasks. Code can be generated from structured descriptions (e.g., behavior-driven development scenarios) written by non-technical team members, such as business analysts and product managers, instead of being written manually from scratch, which shortens development time and increases productivity.

Tools like ChatGPT, GitHub Copilot, or Amazon CodeWhisperer can be employed for such automation scripting to boost productivity (for example, using CodeWhisperer to automate unit tests). External AI chatbot companions may struggle to keep up when test cases become complex or abstracted. Integrated coding AI tools like Copilot tend to be more effective in those cases, providing local, in-line feedback based on previous patterns and direct code context.

At a high level, you would:

1. Tweak LLM settings, if necessary

For instance, in ChatGPT, you can disable the chat history to control training more precisely. Phind (based on GPT 4.0) has toggles controlling the length of the answer and the preferred model to use. A “Pair Programmer” toggle allows the tool to explicitly question the user for specifics on their prompt in a back-and-forth manner.

2. Provide the initial prompt

A few tips to improve the results of this step are:

- List the exact steps a user would take for the scenario;

- Separate the sections of your prompt using the “###” syntax;

- Leverage the latest versions of models;

- Use direct, unambiguous commands;

- Be more context-specific, gradually increasing the level of detail (in addition to steps, context can involve e.g. partial source code);

- Split more complex requests into a chain of simpler prompts;

- Limit the scope of the model's output.
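Several of the tips above (exact steps, "###" separators, added context, limited output) can be combined in a small helper that assembles the prompt. The sketch below is only one possible layout: the function name `build_test_prompt` and the section titles are hypothetical, and the resulting string would still need to be sent to whichever LLM tool you use.

```python
def build_test_prompt(scenario: str, steps: list[str],
                      context: str = "", output_limit: str = "") -> str:
    """Assemble a prompt with '###'-separated sections (hypothetical helper)."""
    sections = [
        # A direct, unambiguous command comes first.
        "### Task\nWrite an automated UI test for the scenario below.",
        f"### Scenario\n{scenario}",
        # The exact user steps, numbered in order.
        "### Steps\n" + "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1)),
    ]
    if context:
        # Optional context, e.g. partial source code of the page under test.
        sections.append(f"### Context\n{context}")
    if output_limit:
        # Optional constraint that limits the scope of the model's output.
        sections.append(f"### Output\n{output_limit}")
    return "\n\n".join(sections)


prompt = build_test_prompt(
    scenario="Registered user logs in",
    steps=["Open the login page", "Enter valid credentials", "Click the login button"],
    output_limit="Return only the test code, without explanations.",
)
print(prompt)
```

Keeping the prompt in code like this also makes it easier to iterate: you can add context or tighten the output section between attempts without rewriting the whole request.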

A few known challenges include front-end libraries like React and Angular, which abstract away the underlying HTML, making it extremely difficult to copy and paste the relevant code into the AI prompt. Additionally, applications that run only locally or sit behind authentication layers make it impossible to provide a URL that the AI can reference.

3. Verify the results and iterate

It is okay to write lengthy follow-up prompts as long as the instructions themselves are clear. The more complex a test scenario gets, the more context a prompt will need to produce accurate results. Achieving impressive accuracy in custom environments will require fine-tuning generative AI tools and their underlying LLMs with large volumes of high-quality, company-specific data, while keeping security in mind.

Just remember that in many real use cases the result, even after several iterations, will be a set of solid building blocks for an executable automated test, not a 100% finalized solution. For now, it will still need adjustments made by humans.

Maintenance

The brittle nature of UI automation has been a challenge for quite some time, so self-healing is one of the primary maintenance aspects that AI facilitates.

We briefly mentioned in the previous section that traditional test automation tools have specific identifiers to define the components of an application. Due to those fixed definitions, the tests start failing once components change.

In contrast, artificial intelligence algorithms can automatically detect unexpected issues (e.g., due to dynamic properties) and either suggest a valid, better alternative or adjust the test case automatically. Object recognition lets the tool pick up new elements and updated attributes in the DOM without manual effort.
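As a rough illustration of self-healing, the sketch below first tries the fixed identifier and, if that fails, falls back to the element whose other recorded attributes are the closest match. Everything here is an assumption made for the example: the page is modeled as a plain list of attribute dictionaries rather than a real DOM, the function names are hypothetical, and simple string similarity from Python's standard `difflib` module stands in for the ML-based matching a commercial tool would use.

```python
from difflib import SequenceMatcher


def attribute_similarity(expected: dict[str, str], candidate: dict[str, str]) -> float:
    """Average string similarity over the attributes recorded for the element."""
    scores = [
        SequenceMatcher(None, value, candidate.get(name, "")).ratio()
        for name, value in expected.items()
    ]
    return sum(scores) / len(scores) if scores else 0.0


def find_element(page: list[dict[str, str]], recorded: dict[str, str],
                 min_score: float = 0.6):
    """Return (element, healed); healed is True when a fallback match was used."""
    # 1. Try the fixed identifier first, as a traditional tool would.
    recorded_id = recorded.get("id")
    if recorded_id is not None:
        for element in page:
            if element.get("id") == recorded_id:
                return element, False

    # 2. Fall back to the closest match on the remaining recorded attributes.
    others = {name: value for name, value in recorded.items() if name != "id"}
    best = max(page, key=lambda el: attribute_similarity(others, el), default=None)
    if best is not None and attribute_similarity(others, best) >= min_score:
        return best, True

    # 3. Nothing similar enough: report the failure instead of guessing.
    return None, False
```

A healed match would typically be surfaced to the tester as a suggestion, so that the stored locator can be reviewed and updated rather than silently replaced.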

On the scripted side, AI can simplify code maintenance and promote consistency across different platforms and applications (e.g., by converting functional code to different environments). Generative AI can also be used to automate code summarization and documentation - it can review code, add explanatory comments, and create output summaries and application documentation in a concise, human-readable format.

Execution

AI involvement in execution works similarly for both categories. Beyond what we already mentioned, AI can help with configuring, scheduling, and triggering your tests. It can also assist with decision-making about the execution scope.

One of the critical questions most companies struggle with is, "What is the smallest suite of tests we should execute to validate this new code sufficiently?" AI tools can be used to answer just that - they can analyze the current test coverage, flag dependencies, and point out areas at risk.
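The core of that question can be sketched without any AI at all, which helps show what an intelligent tool adds on top. In the deliberately simple baseline below, the coverage map, file names, and function names are all hypothetical: tests are selected when the files they cover overlap with the change set, and changed files that no test covers are flagged as areas at risk. An AI-based tool would typically refine this with learned signals, such as historical failure rates.

```python
def select_tests(changed_files: set[str], coverage: dict[str, set[str]]) -> list[str]:
    """Return the tests whose covered files overlap with the changed files."""
    return sorted(test for test, files in coverage.items() if files & changed_files)


def uncovered_changes(changed_files: set[str], coverage: dict[str, set[str]]) -> list[str]:
    """Return changed files that no test covers, i.e. areas at risk."""
    covered = set().union(*coverage.values())
    return sorted(changed_files - covered)


# Hypothetical coverage map: test name -> source files it exercises.
coverage = {
    "test_login": {"auth.py", "session.py"},
    "test_cart": {"cart.py"},
    "test_checkout": {"cart.py", "payment.py"},
}
changed = {"cart.py", "tax.py"}

print(select_tests(changed, coverage))
print(uncovered_changes(changed, coverage))
```

Here only the cart and checkout tests would run for the change, while `tax.py` is reported as changed but untested.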

However, it is important to understand the distinction between real technologies that are ready to create business value and those that show promise but have limitations and issues that still need to be addressed. For instance, you can enhance your testing processes with the powerful features of Xray Enterprise such as Test Case Designer and achieve a balance between manual tasks automated by intelligent algorithms and test management tools.

Embracing the future: how AI elevates the efficacy of automated testing

No matter your position in the tech stack, the promising reality is that AI is likely to make your life easier and your work more efficient, even if not immediately. AI-powered testing tools and techniques are already making a noticeable impact on the testing landscape, streamlining processes, promoting experimentation, and improving overall software quality.

AI still has to be paired with the creativity, intuition, and problem-solving abilities of human developers and testers. That collaboration creates a powerful synergy, blending human intuition and contextual understanding with the data-driven insights of LLMs and enhancing the efficiency and effectiveness of testing processes.

Looking further into the future, with autonomously built software, continuous testing will be critical. As the delivery lifecycle condenses, more testing will be needed than ever before. Automating individual tests based on acceptance criteria will not be enough. AI will help design, deploy, and maintain complex test architectures, test new functionality end to end, continually conduct exploratory testing, and execute ever-evolving regression suites.

Stay one step ahead with AI updates from the industry with our useful AI and software testing blogs.